Master the AWS-Certified-Machine-Learning-Specialty AWS Certified Machine Learning - Specialty content and be ready for exam day success quickly with this Passleader AWS-Certified-Machine-Learning-Specialty free question. We guarantee it!We make it a reality and give you real AWS-Certified-Machine-Learning-Specialty questions in our Amazon AWS-Certified-Machine-Learning-Specialty braindumps.Latest 100% VALID Amazon AWS-Certified-Machine-Learning-Specialty Exam Questions Dumps at below page. You can use our Amazon AWS-Certified-Machine-Learning-Specialty braindumps and pass your exam.

Free demo questions for Amazon AWS-Certified-Machine-Learning-Specialty Exam Dumps Below:

NEW QUESTION 1

A machine learning specialist needs to analyze comments on a news website with users across the globe. The specialist must find the most discussed topics in the comments that are in either English or Spanish.

What steps could be used to accomplish this task? (Choose two.)

- A. Use an Amazon SageMaker BlazingText algorithm to find the topics independently from language.Proceed with the analysis.

- B. Use an Amazon SageMaker seq2seq algorithm to translate from Spanish to English, if necessar

- C. Use aSageMaker Latent Dirichlet Allocation (LDA) algorithm to find the topics.

- D. Use Amazon Translate to translate from Spanish to English, if necessar

- E. Use Amazon Comprehend topic modeling to find the topics.

- F. Use Amazon Translate to translate from Spanish to English, if necessar

- G. Use Amazon Lex to extract topics form the content.

- H. Use Amazon Translate to translate from Spanish to English, if necessar

- I. Use Amazon SageMaker Neural Topic Model (NTM) to find the topics.

Answer: B

NEW QUESTION 2

A Data Scientist needs to create a serverless ingestion and analytics solution for high-velocity, real-time streaming data.

The ingestion process must buffer and convert incoming records from JSON to a query-optimized, columnar format without data loss. The output datastore must be highly available, and Analysts must be able to run SQL queries against the data and connect to existing business intelligence dashboards.

Which solution should the Data Scientist build to satisfy the requirements?

- A. Create a schema in the AWS Glue Data Catalog of the incoming data forma

- B. Use an Amazon Kinesis Data Firehose delivery stream to stream the data and transform the data to Apache Parquet or ORC format using the AWS Glue Data Catalog before delivering to Amazon S3. Have the Analysts query the data directly from Amazon S3 using Amazon Athena, and connect to Bl tools using the Athena Java Database Connectivity (JDBC) connector.

- C. Write each JSON record to a staging location in Amazon S3. Use the S3 Put event to trigger an AWS Lambda function that transforms the data into Apache Parquet or ORC format and writes the data to a processed data location in Amazon S3. Have the Analysts query the data directly from Amazon S3 using Amazon Athena, and connect to Bl tools using the Athena Java Database Connectivity (JDBC) connector.

- D. Write each JSON record to a staging location in Amazon S3. Use the S3 Put event to trigger an AWS Lambda function that transforms the data into Apache Parquet or ORC format and inserts it into an Amazon RDS PostgreSQL databas

- E. Have the Analysts query and run dashboards from the RDS database.

- F. Use Amazon Kinesis Data Analytics to ingest the streaming data and perform real-time SQL queries to convert the records to Apache Parquet before delivering to Amazon S3. Have the Analysts query the data directly from Amazon S3 using Amazon Athena and connect to Bl tools using the Athena Java Database Connectivity (JDBC) connector.

Answer: A

NEW QUESTION 3

A company is building a predictive maintenance model based on machine learning (ML). The data is stored in a fully private Amazon S3 bucket that is encrypted at rest with AWS Key Management Service (AWS KMS) CMKs. An ML specialist must run data preprocessing by using an Amazon SageMaker Processing job that is triggered from code in an Amazon SageMaker notebook. The job should read data from Amazon S3, process it, and upload it back to the same S3 bucket. The preprocessing code is stored in a container image in Amazon Elastic Container Registry (Amazon ECR). The ML specialist needs to grant permissions to ensure a smooth data preprocessing workflow.

Which set of actions should the ML specialist take to meet these requirements?

- A. Create an IAM role that has permissions to create Amazon SageMaker Processing jobs, S3 read and write access to the relevant S3 bucket, and appropriate KMS and ECR permission

- B. Attach the role to the SageMaker notebook instanc

- C. Create an Amazon SageMaker Processing job from the notebook.

- D. Create an IAM role that has permissions to create Amazon SageMaker Processing job

- E. Attach the role to the SageMaker notebook instanc

- F. Create an Amazon SageMaker Processing job with an IAM role that has read and write permissions to the relevant S3 bucket, and appropriate KMS and ECR permissions.

- G. Create an IAM role that has permissions to create Amazon SageMaker Processing jobs and to access Amazon EC

- H. Attach the role to the SageMaker notebook instanc

- I. Set up both an S3 endpoint and a KMS endpoint in the default VP

- J. Create Amazon SageMaker Processing jobs from the notebook.

- K. Create an IAM role that has permissions to create Amazon SageMaker Processing job

- L. Attach the role to the SageMaker notebook instanc

- M. Set up an S3 endpoint in the default VP

- N. Create Amazon SageMaker Processing jobs with the access key and secret key of the IAM user with appropriate KMS and ECR permissions.

Answer: D

NEW QUESTION 4

A company has raw user and transaction data stored in AmazonS3 a MySQL database, and Amazon RedShift A Data Scientist needs to perform an analysis by joining the three datasets from Amazon S3, MySQL, and Amazon RedShift, and then calculating the average-of a few selected columns from the joined data

Which AWS service should the Data Scientist use?

- A. Amazon Athena

- B. Amazon Redshift Spectrum

- C. AWS Glue

- D. Amazon QuickSight

Answer: A

NEW QUESTION 5

A Data Engineer needs to build a model using a dataset containing customer credit card information. How can the Data Engineer ensure the data remains encrypted and the credit card information is secure?

- A. Use a custom encryption algorithm to encrypt the data and store the data on an Amazon SageMaker instance in a VP

- B. Use the SageMaker DeepAR algorithm to randomize the credit card numbers.

- C. Use an IAM policy to encrypt the data on the Amazon S3 bucket and Amazon Kinesis to automatically discard credit card numbers and insert fake credit card numbers.

- D. Use an Amazon SageMaker launch configuration to encrypt the data once it is copied to the SageMaker instance in a VP

- E. Use the SageMaker principal component analysis (PCA) algorithm to reduce the length of the credit card numbers.

- F. Use AWS KMS to encrypt the data on Amazon S3 and Amazon SageMaker, and redact the credit card numbers from the customer data with AWS Glue.

Answer: D

NEW QUESTION 6

A Data Scientist wants to gain real-time insights into a data stream of GZIP files. Which solution would allow the use of SQL to query the stream with the LEAST latency?

- A. Amazon Kinesis Data Analytics with an AWS Lambda function to transform the data.

- B. AWS Glue with a custom ETL script to transform the data.

- C. An Amazon Kinesis Client Library to transform the data and save it to an Amazon ES cluster.

- D. Amazon Kinesis Data Firehose to transform the data and put it into an Amazon S3 bucket.

Answer: A

NEW QUESTION 7

A Machine Learning Specialist is given a structured dataset on the shopping habits of a company’s customer base. The dataset contains thousands of columns of data and hundreds of numerical columns for each customer. The Specialist wants to identify whether there are natural groupings for these columns across all customers and visualize the results as quickly as possible.

What approach should the Specialist take to accomplish these tasks?

- A. Embed the numerical features using the t-distributed stochastic neighbor embedding (t-SNE) algorithm and create a scatter plot.

- B. Run k-means using the Euclidean distance measure for different values of k and create an elbow plot.

- C. Embed the numerical features using the t-distributed stochastic neighbor embedding (t-SNE) algorithm and create a line graph.

- D. Run k-means using the Euclidean distance measure for different values of k and create box plots for each numerical column within each cluster.

Answer: B

NEW QUESTION 8

A Data Science team is designing a dataset repository where it will store a large amount of training data commonly used in its machine learning models. As Data Scientists may create an arbitrary number of new datasets every day the solution has to scale automatically and be cost-effective. Also, it must be possible to explore the data using SQL.

Which storage scheme is MOST adapted to this scenario?

- A. Store datasets as files in Amazon S3.

- B. Store datasets as files in an Amazon EBS volume attached to an Amazon EC2 instance.

- C. Store datasets as tables in a multi-node Amazon Redshift cluster.

- D. Store datasets as global tables in Amazon DynamoDB.

Answer: A

NEW QUESTION 9

A Machine Learning Specialist wants to bring a custom algorithm to Amazon SageMaker. The Specialist implements the algorithm in a Docker container supported by Amazon SageMaker.

How should the Specialist package the Docker container so that Amazon SageMaker can launch the training correctly?

- A. Modify the bash_profile file in the container and add a bash command to start the training program

- B. Use CMD config in the Dockerfile to add the training program as a CMD of the image

- C. Configure the training program as an ENTRYPOINT named train

- D. Copy the training program to directory /opt/ml/train

Answer: B

NEW QUESTION 10

A company is using Amazon Textract to extract textual data from thousands of scanned text-heavy legal documents daily. The company uses this information to process loan applications automatically. Some of the documents fail business validation and are returned to human reviewers, who investigate the errors. This activity increases the time to process the loan applications.

What should the company do to reduce the processing time of loan applications?

- A. Configure Amazon Textract to route low-confidence predictions to Amazon SageMaker Ground Truth.Perform a manual review on those words before performing a business validation.

- B. Use an Amazon Textract synchronous operation instead of an asynchronous operation.

- C. Configure Amazon Textract to route low-confidence predictions to Amazon Augmented AI (AmazonA2I). Perform a manual review on those words before performing a business validation.

- D. Use Amazon Rekognition's feature to detect text in an image to extract the data from scanned images.Use this information to process the loan applications.

Answer: C

NEW QUESTION 11

A financial services company wants to adopt Amazon SageMaker as its default data science environment. The company's data scientists run machine learning (ML) models on confidential financial data. The company is worried about data egress and wants an ML engineer to secure the environment.

Which mechanisms can the ML engineer use to control data egress from SageMaker? (Choose three.)

- A. Connect to SageMaker by using a VPC interface endpoint powered by AWS PrivateLink.

- B. Use SCPs to restrict access to SageMaker.

- C. Disable root access on the SageMaker notebook instances.

- D. Enable network isolation for training jobs and models.

- E. Restrict notebook presigned URLs to specific IPs used by the company.

- F. Protect data with encryption at rest and in transi

- G. Use AWS Key Management Service (AWS KMS) to manage encryption keys.

Answer: BDE

Explanation:

https://aws.amazon.com/blogs/machine-learning/millennium-management-secure-machine-learning-using-amaz

NEW QUESTION 12

A retail company is using Amazon Personalize to provide personalized product recommendations for its customers during a marketing campaign. The company sees a significant increase in sales of recommended items to existing customers immediately after deploying a new solution version, but these sales decrease a short time after deployment. Only historical data from before the marketing campaign is available for training.

How should a data scientist adjust the solution?

- A. Use the event tracker in Amazon Personalize to include real-time user interactions.

- B. Add user metadata and use the HRNN-Metadata recipe in Amazon Personalize.

- C. Implement a new solution using the built-in factorization machines (FM) algorithm in Amazon SageMaker.

- D. Add event type and event value fields to the interactions dataset in Amazon Personalize.

Answer: A

NEW QUESTION 13

A company provisions Amazon SageMaker notebook instances for its data science team and creates Amazon VPC interface endpoints to ensure communication between the VPC and the notebook instances. All connections to the Amazon SageMaker API are contained entirely and securely using the AWS network. However, the data science team realizes that individuals outside the VPC can still connect to the notebook instances across the internet.

Which set of actions should the data science team take to fix the issue?

- A. Modify the notebook instances' security group to allow traffic only from the CIDR ranges of the VP

- B. Apply this security group to all of the notebook instances' VPC interfaces.

- C. Create an IAM policy that allows the sagemaker:CreatePresignedNotebooklnstanceUrl and sagemaker:DescribeNotebooklnstance actions from only the VPC endpoint

- D. Apply this policy to all IAM users, groups, and roles used to access the notebook instances.

- E. Add a NAT gateway to the VP

- F. Convert all of the subnets where the Amazon SageMaker notebook instances are hosted to private subnet

- G. Stop and start all of the notebook instances to reassign only private IP addresses.

- H. Change the network ACL of the subnet the notebook is hosted in to restrict access to anyone outside the VPC.

Answer: B

NEW QUESTION 14

A Machine Learning Specialist is building a prediction model for a large number of features using linear models, such as linear regression and logistic regression During exploratory data analysis the Specialist observes that many features are highly correlated with each other This may make the model unstable

What should be done to reduce the impact of having such a large number of features?

- A. Perform one-hot encoding on highly correlated features

- B. Use matrix multiplication on highly correlated features.

- C. Create a new feature space using principal component analysis (PCA)

- D. Apply the Pearson correlation coefficient

Answer: B

NEW QUESTION 15

A Machine Learning Specialist working for an online fashion company wants to build a data ingestion solution for the company's Amazon S3-based data lake.

The Specialist wants to create a set of ingestion mechanisms that will enable future capabilities comprised of:

• Real-time analytics

• Interactive analytics of historical data

• Clickstream analytics

• Product recommendations

Which services should the Specialist use?

- A. AWS Glue as the data dialog; Amazon Kinesis Data Streams and Amazon Kinesis Data Analytics for real-time data insights; Amazon Kinesis Data Firehose for delivery to Amazon ES for clickstream analytics; Amazon EMR to generate personalized product recommendations

- B. Amazon Athena as the data catalog; Amazon Kinesis Data Streams and Amazon Kinesis Data Analytics for near-realtime data insights; Amazon Kinesis Data Firehose for clickstream analytics; AWS Glue to generate personalized product recommendations

- C. AWS Glue as the data catalog; Amazon Kinesis Data Streams and Amazon Kinesis Data Analytics for historical data insights; Amazon Kinesis Data Firehose for delivery to Amazon ES for clickstream analytics; Amazon EMR to generate personalized product recommendations

- D. Amazon Athena as the data catalog; Amazon Kinesis Data Streams and Amazon Kinesis Data Analytics for historical data insights; Amazon DynamoDB streams for clickstream analytics; AWS Glue to generate personalized product recommendations

Answer: A

NEW QUESTION 16

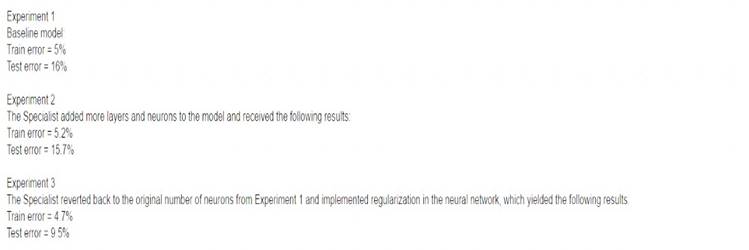

An Machine Learning Specialist discover the following statistics while experimenting on a model.

What can the Specialist from the experiments?

- A. The model In Experiment 1 had a high variance error lhat was reduced in Experiment 3 by regularization Experiment 2 shows that there is minimal bias error in Experiment 1

- B. The model in Experiment 1 had a high bias error that was reduced in Experiment 3 by regularization Experiment 2 shows that there is minimal variance error in Experiment 1

- C. The model in Experiment 1 had a high bias error and a high variance error that were reduced in Experiment 3 by regularization Experiment 2 shows thai high bias cannot be reduced by increasing layers and neurons in the model

- D. The model in Experiment 1 had a high random noise error that was reduced in Expenment 3 by regularization Expenment 2 shows that random noise cannot be reduced by increasing layers and neurons in the model

Answer: C

NEW QUESTION 17

A trucking company is collecting live image data from its fleet of trucks across the globe. The data is growing rapidly and approximately 100 GB of new data is generated every day. The company wants to explore machine learning uses cases while ensuring the data is only accessible to specific IAM users.

Which storage option provides the most processing flexibility and will allow access control with IAM?

- A. Use a database, such as Amazon DynamoDB, to store the images, and set the IAM policies to restrict access to only the desired IAM users.

- B. Use an Amazon S3-backed data lake to store the raw images, and set up the permissions using bucket policies.

- C. Setup up Amazon EMR with Hadoop Distributed File System (HDFS) to store the files, and restrictaccess to the EMR instances using IAM policies.

- D. Configure Amazon EFS with IAM policies to make the data available to Amazon EC2 instances owned by the IAM users.

Answer: C

NEW QUESTION 18

A Machine Learning Specialist uploads a dataset to an Amazon S3 bucket protected with server-side encryption using AWS KMS.

How should the ML Specialist define the Amazon SageMaker notebook instance so it can read the same dataset from Amazon S3?

- A. Define security group(s) to allow all HTTP inbound/outbound traffic and assign those security group(s) tothe Amazon SageMaker notebook instance.

- B. onfigure the Amazon SageMaker notebook instance to have access to the VP

- C. Grant permission in the KMS key policy to the notebook’s KMS role.

- D. Assign an IAM role to the Amazon SageMaker notebook with S3 read access to the datase

- E. Grant permission in the KMS key policy to that role.

- F. Assign the same KMS key used to encrypt data in Amazon S3 to the Amazon SageMaker notebook instance.

Answer: D

NEW QUESTION 19

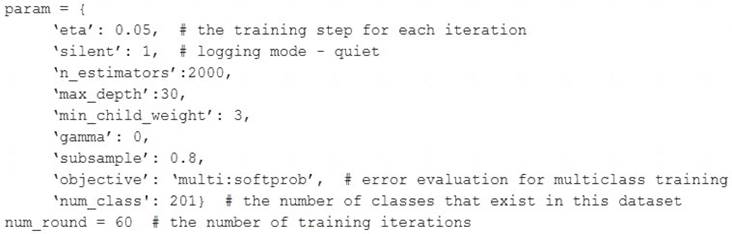

A Machine Learning Specialist is assigned to a Fraud Detection team and must tune an XGBoost model, which is working appropriately for test data. However, with unknown data, it is not working as expected. The existing parameters are provided as follows.

Which parameter tuning guidelines should the Specialist follow to avoid overfitting?

- A. Increase the max_depth parameter value.

- B. Lower the max_depth parameter value.

- C. Update the objective to binary:logistic.

- D. Lower the min_child_weight parameter value.

Answer: B

NEW QUESTION 20

......

P.S. Easily pass AWS-Certified-Machine-Learning-Specialty Exam with 307 Q&As Surepassexam Dumps & pdf Version, Welcome to Download the Newest Surepassexam AWS-Certified-Machine-Learning-Specialty Dumps: https://www.surepassexam.com/AWS-Certified-Machine-Learning-Specialty-exam-dumps.html (307 New Questions)